Autonomy in off-road, highly dynamic environments, without network access

Augment vision and perception in highly dynamic environments.

Semantic labelling

Guidelines

Dynamic routing

Network-free navigation

Object avoidance

Proximity detection

Navigate in dirty conditions

Map maintenance

Small spaces

Coming next

Autonomous job prioritization: enabling a machine to independently prioritize its most important tasks and route dynamically in real time.

Try the Opteran Mind

We provide several ways to test our general-purpose autonomy.

Simulation

Our algorithms are integrated with Unreal Engine, so you can test the Opteran Mind in simulations that represent your business needs.

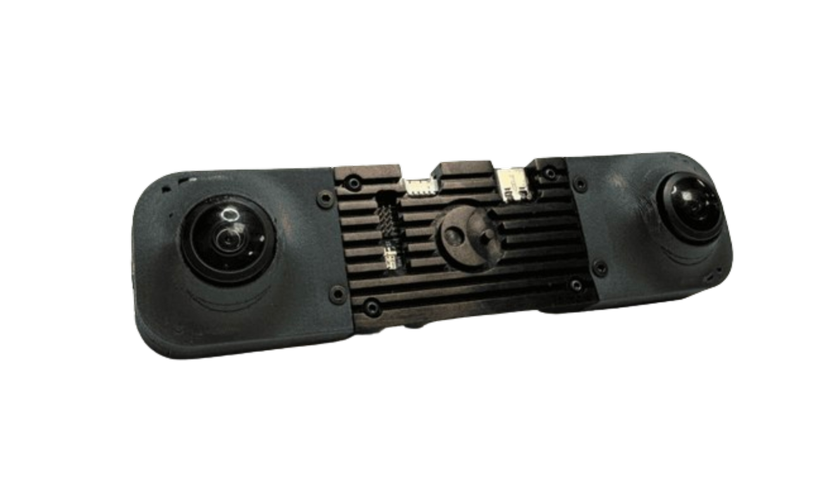

Reference hardware

The Opteran Development Kit (ODK), with integrated software, hardware and pre-calibrated cameras, allows you to integrate Opteran algorithms directly into your machine. Simply plug in a USB or Ethernet cable to access PX4 over MAVLink or ROS APIs and get going.
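As a rough illustration of a first connectivity check over MAVLink, the sketch below decodes a MAVLink 2 HEARTBEAT frame using only the Python standard library and reports whether the sender identifies itself as a PX4 autopilot. It is not Opteran's API. The function names are hypothetical, and the UDP transport and port 14550 are assumptions based on common MAVLink setups rather than documented ODK settings. Checksum and signature verification are deliberately left out to keep the example short.

```python
import socket
import struct
import time

MAVLINK_V2_MAGIC = 0xFD   # start-of-frame marker for MAVLink 2
HEARTBEAT_MSG_ID = 0      # HEARTBEAT message id in the common dialect
MAV_AUTOPILOT_PX4 = 12    # MAV_AUTOPILOT_PX4 enum value


def parse_heartbeat(frame: bytes):
    """Decode one MAVLink 2 HEARTBEAT frame; return None for anything else.

    Hypothetical helper: the checksum and optional signature are NOT verified.
    """
    if len(frame) < 12 or frame[0] != MAVLINK_V2_MAGIC:
        return None
    # Header bytes 1..6: payload length, two flag bytes, sequence, system id, component id.
    payload_len, _incompat, _compat, seq, sysid, compid = struct.unpack_from("<6B", frame, 1)
    msgid = int.from_bytes(frame[7:10], "little")  # 24-bit message id
    if msgid != HEARTBEAT_MSG_ID or len(frame) < 10 + payload_len + 2:
        return None
    # MAVLink 2 drops trailing zero bytes, so pad back to the full 9-byte payload.
    payload = frame[10:10 + payload_len][:9].ljust(9, b"\x00")
    custom_mode, mav_type, autopilot, base_mode, status, version = struct.unpack(
        "<IBBBBB", payload
    )
    return {
        "system_id": sysid,
        "component_id": compid,
        "sequence": seq,
        "type": mav_type,
        "autopilot": autopilot,
        "is_px4": autopilot == MAV_AUTOPILOT_PX4,
        "base_mode": base_mode,
        "custom_mode": custom_mode,
        "system_status": status,
        "mavlink_version": version,
    }


def wait_for_heartbeat(port: int = 14550, timeout: float = 5.0):
    """Listen on a UDP port (assumed, not an ODK default) for the first heartbeat."""
    deadline = time.monotonic() + timeout
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind(("0.0.0.0", port))
        while True:
            remaining = deadline - time.monotonic()
            if remaining <= 0:
                return None
            sock.settimeout(remaining)
            try:
                data, _addr = sock.recvfrom(2048)
            except socket.timeout:
                return None
            # Only the first frame in each datagram is inspected in this sketch.
            heartbeat = parse_heartbeat(data)
            if heartbeat is not None:
                return heartbeat
```

Calling `wait_for_heartbeat()` with the device connected would return a dictionary describing the first heartbeat seen, or `None` if nothing arrives before the timeout. In practice an established library such as pymavlink or MAVSDK, or the ROS interface, handles framing and validation for you.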

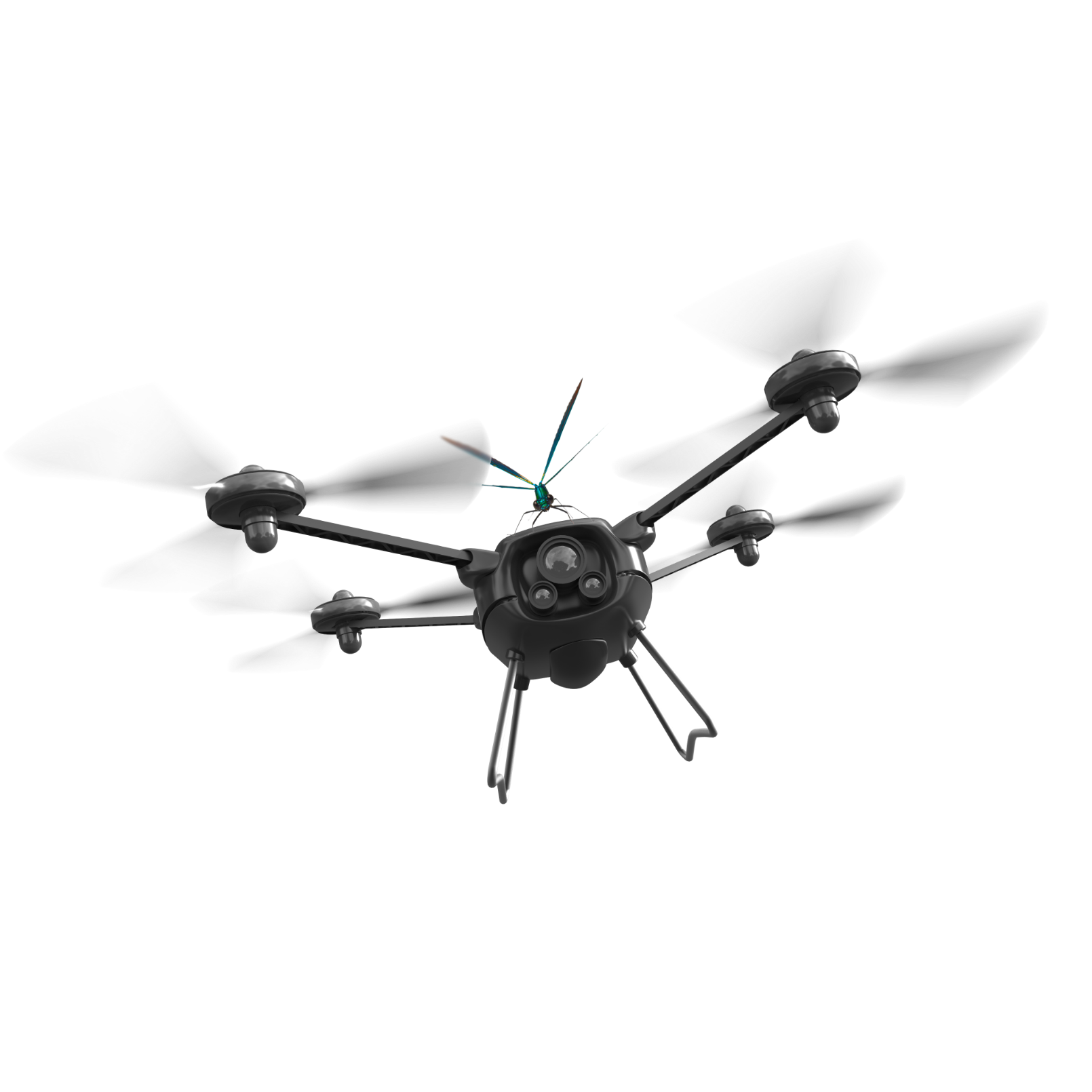

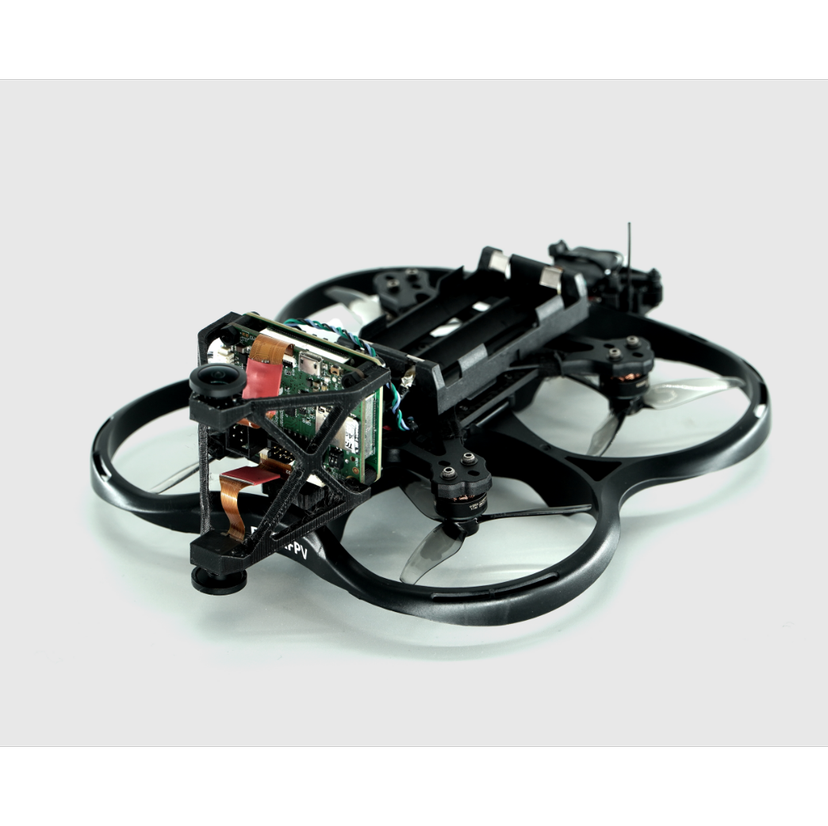

Reference machines

To make evaluation even simpler, we have pre-integrated the ODK into a drone and a ground-based robot so you can test the Opteran Mind quickly and easily.